About Topic In Short:

Who: Institute Name: Carnegie Mellon University. Authors: Chris McComb and Glen Williams

What: Design for Artificial Intelligence (DfAI), a framework for integrating AI into engineering design from the beginning, enabling breakthrough improvements in technology development.

How: The DfAI framework comprises three key components: DfAI Personnel, DfAI Applications, and DfAI Framework, which address AI literacy, engineering system redesign, and the enhancement of AI development in engineering.

Introduction:

This piece explores the "Design for Artificial Intelligence" (DfAI) framework, a collaboration between researchers at Carnegie Mellon University and Penn State University. It examines the challenges of integrating AI into engineering design and underscores the essential role engineers must play in mastering DfAI as a specialized discipline.

The Genesis of the DfAI Framework:

The DfAI framework grew out of a realization the researchers reached amid the rapidly evolving landscape of engineering design and manufacturing: very few engineers had expertise in both engineering system design and artificial intelligence. Recognizing the potential AI held for engineering design, they set out to create a distinct discipline that could unlock new advances.

The Visionaries: Chris McComb and Glen Williams:

The DfAI framework was conceived by Chris McComb and Glen Williams. Chris McComb, an associate professor of mechanical engineering, stressed the need to weave AI into the very fabric of the engineering design process rather than treating it as a mere add-on to existing systems.

Glen Williams, a former student of McComb and now principal scientist at Re:Build Manufacturing, illustrated the framework's significance with a hypothetical scenario: two companies mass-producing electric aircraft. Company A takes the traditional manual approach to reach the market and profitability faster, while Company B pursues a data-rich approach, capturing information throughout the design's lifecycle. Over time, Company B's data-driven approach yields major cost reductions and a decisive competitive edge over Company A.

The Pillars of the DfAI Framework:

At the core of the DfAI framework stand three roles that are critical to its success: engineering designers, design repository curators, and AI developers. These roles are interdependent. Engineering designers act as problem solvers who understand both engineering constraints and AI algorithms. Design repository curators maintain the design databases; drawing on deep engineering design and manufacturing knowledge, they provide design engineers with data management tools that serve both current and future needs. AI developers, in turn, conceive, build, market, and continually refine the AI software products that design engineers rely on.

The Boundless Applications of DfAI:

The DfAI framework is not tied to any particular engineering discipline; it applies across the entire engineering design process. It charts a path forward in three directions: raising AI literacy in industry, redesigning engineering systems to accommodate AI integration, and improving the engineering AI development process.

Thus Speak Authors/Experts:

Chris McComb advocates integrating AI into the core of design engineers' work rather than treating it as an afterthought. He believes the future of design and manufacturing depends on equipping engineers with AI-integrated software.

Glen Williams underscores the importance of laying the groundwork through comprehensive frameworks, unified terminology, and well-documented principles. Such efforts can build an interconnected community of AI engineers spanning diverse engineering applications, industries, technologies, and scales of operation, able to collaborate in new ways.

Conclusion:

The DfAI framework marks a significant milestone in the integration of AI into engineering design. The product of collaborative research, it opens the way for informed discussion of the future of AI in engineering. By adopting DfAI principles, industries stand to unlock transformative advances and usher in a new era of innovative, sustainable, and highly profitable technology.

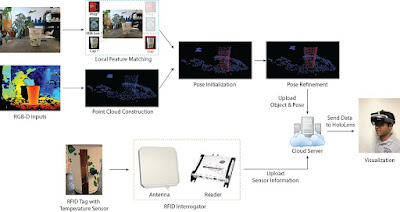

Image Gallery

DfAI Principles (Credit: Carnegie Mellon University, College of Engineering)

All Images Credit: from References/Resources sites [Internet]

Chris McComb presents this work via the ASME Journal of Computing & Information Science in Engineering in the following video.

Hashtag/Keyword/Labels list:

#DfAI #ArtificialIntelligenceEngineering

#EngineeringDesign #AIIntegration #BreakthroughImprovements

#CarnegieMellonUniversity #PennStateUniversity #ChrisMcComb #GlenWilliams

#DataDrivenDesign #AIEngineers #InterconnectedCommunity #AdditiveManufacturing

#Aerospace #MedicalDevices #IoT #SmartDevices

References/Resources:

1. https://engineering.cmu.edu/news-events/news/2022/12/22-dfai.html

2. https://www.pressreader.com/india/electronics-for-you-express/20230203/282742000948776

3. ASME Journal of Computing and Information Science in Engineering

For more such blog posts, visit the Index page or click the InnovationBuzz label.

…till next post, bye-bye and take care.